Shengjie Xu

I am a second-year Computer Science Ph.D. student at the University of Maryland, advised by Prof. Ming C. Lin. I received my M.S. in ECE from UC Santa Cruz, co-advised by Prof. Leilani H. Gilpin and Prof. James E. Davis, and my B.S. from Hebei University of Technology. From 2011 to 2021, I worked at CATARC, developing computer vision and control systems for autonomous vehicles.

Email / CV / Google Scholar / LinkedIn / GitHub

Research

I'm interested in computer vision, machine learning, graphics, and explainable AI, with a focus on connecting the visual and physical worlds. Some papers are highlighted.

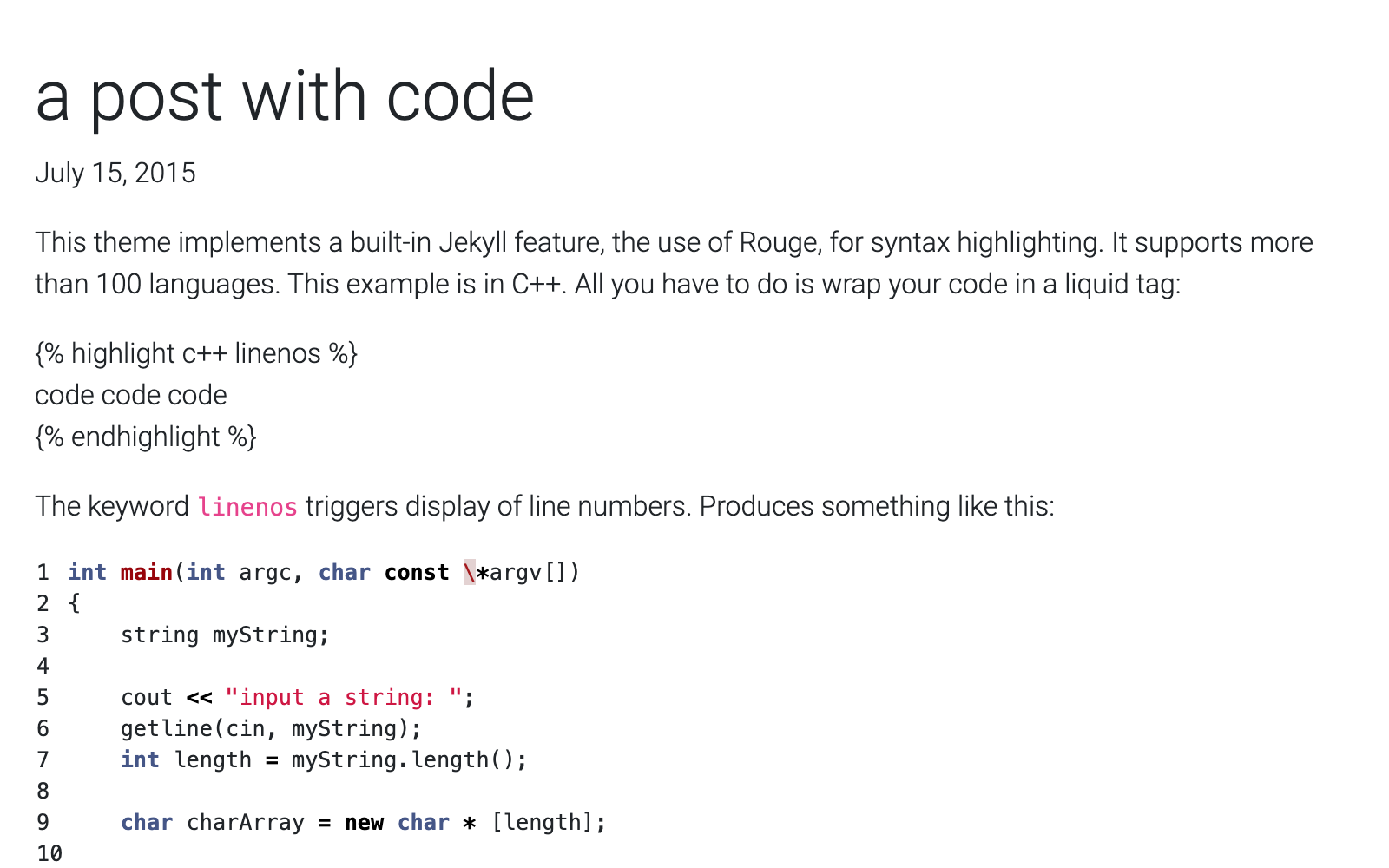

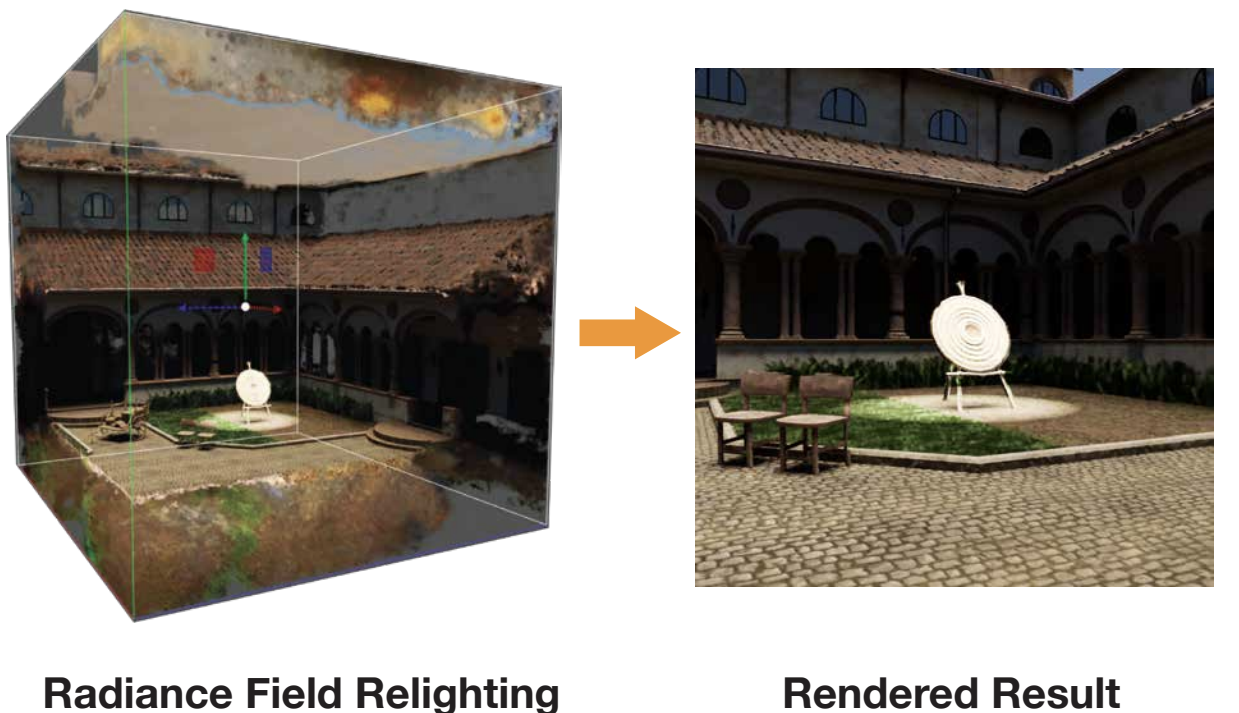

Relight a Video by SVD
Shengjie Xu, Ming C. Lin
arXiv, 2024
code
Coming soon.

Event-Driven Lighting for Immersive Attention Guidance
IEEE Virtual Reality (in submission), 2024
We present an attention-guidance framework that uses LLMs to detect and localize story events in text scripts and then automatically adjusts NeRF-based lighting to enhance user attention and memory retention in VR experiences.

A Framework for Generating Dangerous Scenes for Testing Robustness
Shengjie Xu, Lan Mi, Leilani H. Gilpin
NeurIPS Workshops, 2022
arXiv / code / slides / website
Autonomous driving datasets such as KITTI, nuScenes, Argoverse, and Waymo are realistic but lack faults and difficult maneuvers. We introduce the DANGER framework, which adds edge-case images to these datasets. DANGER manipulates vehicle positions in photorealistic data from real driving scenarios to generate dangerous scenes. Experiments on the Virtual KITTI datasets show that DANGER can extend existing datasets with realistic and anomalous corner cases.

Other Projects

These include coursework, side projects, and unpublished research.

Event Camera 3D Gaussian Splatting
UMD CMSC 848B: Computational Imaging, 2024-06-01
website / paper / code
The associated paper, Event3DGS: Event-based 3D Gaussian Splatting for High-Speed Robot Egomotion, was published at CoRL 2024. Developed as a course project at the University of Maryland, this implementation uses nerfstudio and EventNeRF to train 3D Gaussian Splatting on event camera data.

Infant Locomotion
UCSC ECE216: Bio-inspired Locomotion, 2022-06-07
paper
Developed a control algorithm for an infant humanoid, based on a simple state machine and inspired by how babies learn to crawl and walk. The algorithm covers locomotion stages from crawling to walking and was implemented in MuJoCo. Analyzed crawling, a 2D bipedal model, and early walking from 8 to 18 months of age, evaluating kinematics and simulation results.

Wolverine CARLA in Thunderhill
CATARC, 2021-05-12
code
Inspired by Nitin Kapania’s Stanford Ph.D. thesis, Coursera’s Introduction to Self-Driving Cars, and Charlotte Dorn’s Indianapolis waypoint tracking project, this project reimplements Kapania’s path generation algorithm. We tested it by driving a virtual Audi TT at high speed on the Thunderhill racetrack, using CARLA to simulate an autonomous vehicle.

Design and source code from Jon Barron's website